Scalable, Adaptive, and Resilient Autonomy (SARA) CRA

SARA CRA Overview

Purpose

- Enable Autonomous Maneuver in complex and contested environments.

- Develop fundamental understanding and inform the art-of-the-possible for Scalable, Adaptive, and Resilient Autonomy.

- Improve air and ground based autonomous vehicle perception, learning, reasoning, communication, navigation, and physical capabilities.

- Realize adaptive and resilient Intelligent Systems that can reason about the environment, work in distributed and collaborative heterogeneous teams, and make decisions operational tempo.

Approach

- A series of technology sprint topics executed in annual program cycles integrated with ARL Essential Research Programs (ERPs).

- Integrate state-of-the-art solutions from leading Institutions and PIs into government-owned autonomous testbeds and autonomy stack.

- Comprehensive and cumulative capability exploring and experimentally demonstrating the art-of-the-possible in scalable, adaptive, and resilient autonomy.

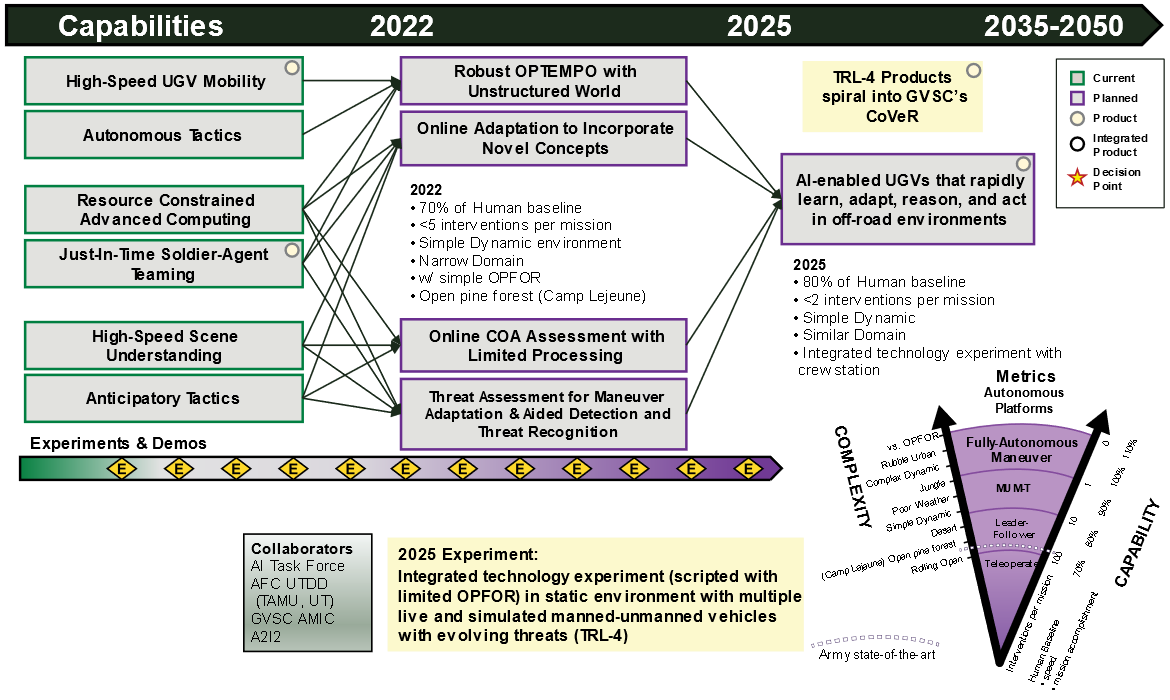

Cycle 1 Technology Sprint Topic: Off-Road Autonomous Maneuver

- How to increase the operational tempo and mobility of autonomous systems to traverse increasingly complex off-road environments.

- Aligned with the Artificial Intelligence for Maneuver and Mobility (AIMM) ERP focus and experimentation for high-speed unmanned ground vehicle (UGV).

Cycle 2 Technology Sprint Topic: Autonomous Complex Terrain Maneuver

- Extend beyond the Cycle #1 topics to explore novel methods for increasing the complexity of the planning, decision-making, and traversable physical environments for off-road maneuver of Army autonomous systems

- Intelligence and path planning capability over long distances, long times scales, varying terrains and surfaces

Products

- Novel methods for all-terrain ground and aerial maneuver, to interact with and move through complex environments.

- Methods for scalable and heterogeneous behaviors in support of collaborative air and ground manned-unmanned teaming (MUM-T) operations.

- Techniques for improved perception, decision making, and adaptive behaviors for fully-autonomous maneuver in contested environments.

- Methods, metrics, and tools to facilitate, simulate, and enable evaluation, verification, and validation of emerging approaches for intelligent and autonomous systems under Army relevant constraints and environments.

- Experimental testbeds to develop and refine knowledge products to inform and transition technology to Army stakeholders.

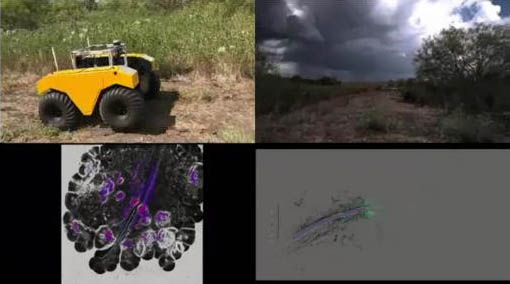

ARL Autonomy Stack implemented on Husky platform

An outcome of Robotics Collaborative Technology Alliance (RCTA)

ARL Autonomy Stack implemented on Warthog platform

Warthog UGV @ Texas A&M University RELLIS Campus

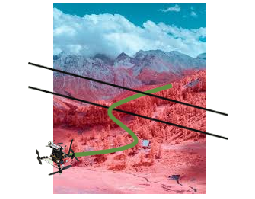

ARL air Autonomy Stack implemented on mission-driven Small UAS

UAS auto-landing on moving Warthog UGV @ APG

Army awards nearly $3M to push research boundaries in off-road autonomy

“The SARA program will help us accelerate our research in autonomous vehicles by including best of breed performers who will augment the capabilities of our core software, enabling future combat vehicles to operate in complex environments,” said Dr. John Fossaceca, acting AIMM ERP program manager at the lab.

“Robotic and autonomous systems need the ability to enter into an unfamiliar area, without the ability to communicate and for which there are no maps showing terrain or structures, make sense of the environment, and perform safely and effectively at the Army’s operational tempo,” said Eric Spero, SARA program manager.

Partners will work in close collaboration with each other and the lab to further develop and then integrate their solutions onto representative testbed platforms, and into the lab’s autonomous systems software repository, for collaboration across the Army Futures Command and Army autonomy enterprise. Disciplined research experimentation will then verify and validate both expected and new behaviors, Spero said.

DEVCOM Army Research Laboratory Office of Strategic Communications

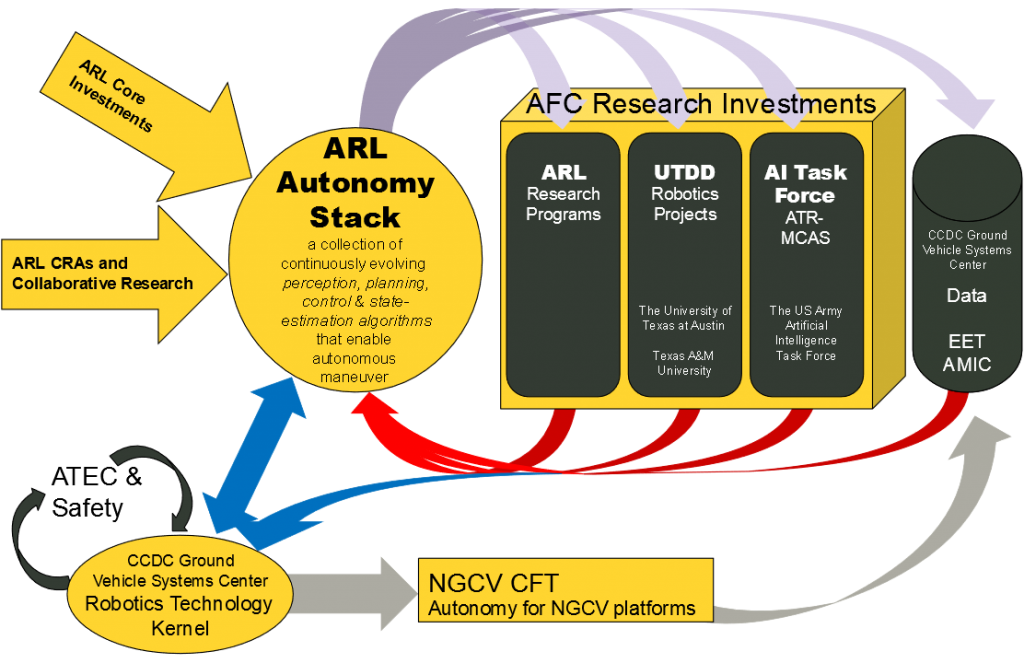

ARL Autonomy Stack

Architecture

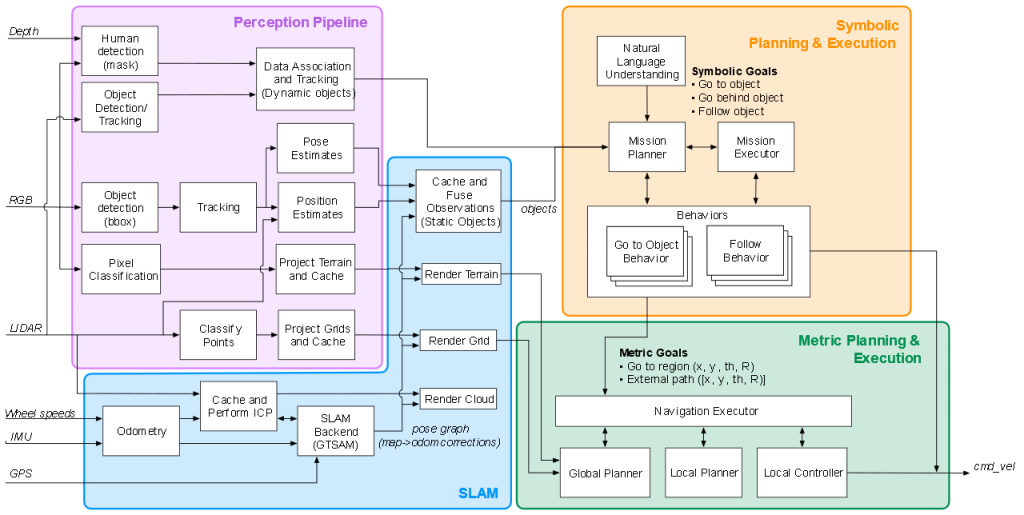

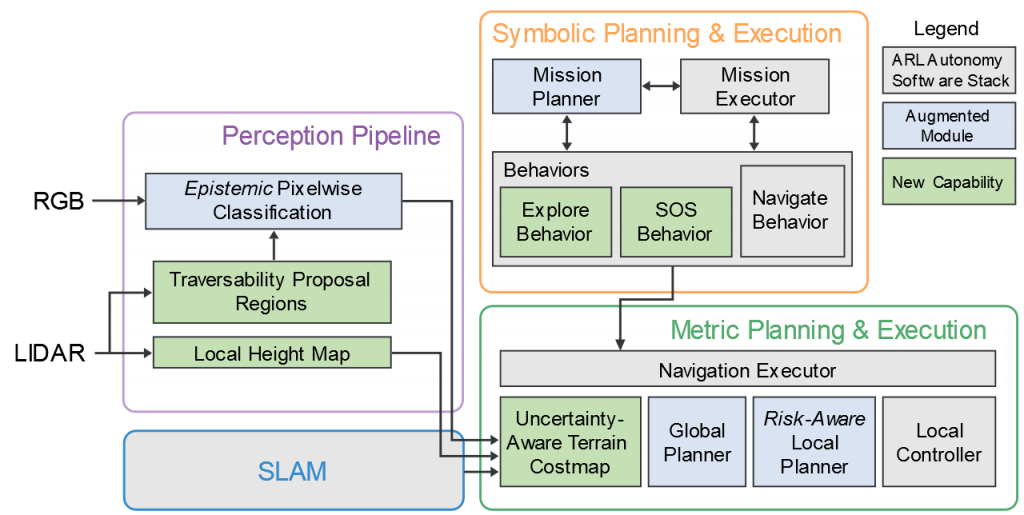

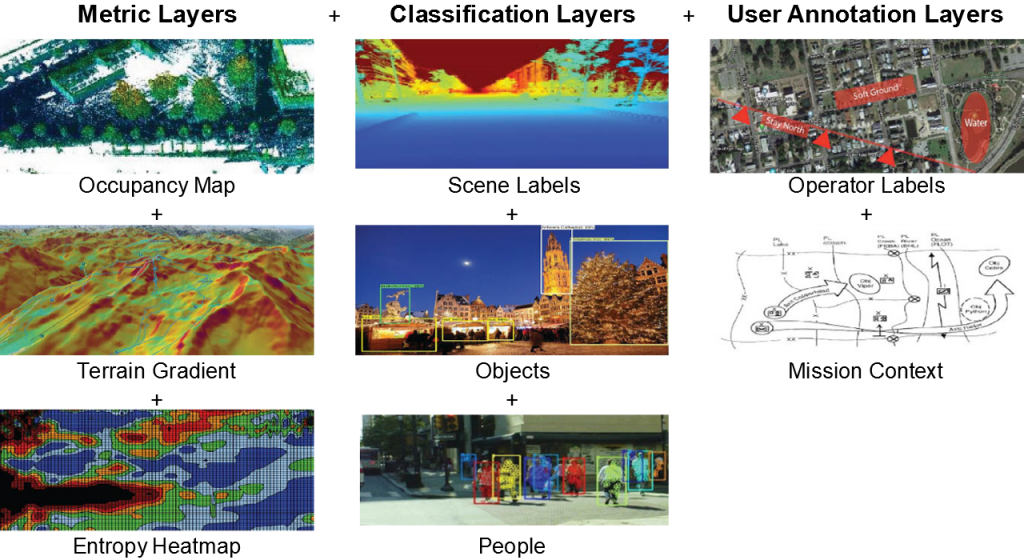

The ARL Autonomy Stack provides an implementation of the architecture and consists of four major capabilities:

- Perception pipeline: Take sensor data (e.g., RGB images and point clouds) and process to symbolic observations. Components include object detection, per-pixel image classification, object position/pose estimation based on LIDAR, etc.

- Simultaneous Localization and Mapping (SLAM): Using sensor data and perception pipeline products, formulate SLAM problem as a pose-graph optimization and solve. Includes components for point cloud alignment (ICP), pose-graph optimization (GTSAM), caching/data-association/fusion of symbolic object measurements, renderers of terrain classes/occupancy grids/point clouds.

- Metric Planning and Execution: Use metric model of the world to achieve metric goals (e.g., waypoint navigation). Includes components for global planning (e.g., lattice-based motion planning), local planning (e.g., trajectory optimization), and an executor to sequence planning and control.

- Symbolic Planning and Execution: Use symbolic model of the world to achieve symbolic goals (e.g., going near a particular object). Underlying symbolic planning architecture is based on behavior trees. Includes components for mission planning (e.g., the Planning and Acting using Behavior Trees), mission execution, sample behaviors that interface with mission planning/execution and the metric planning/execution layer (e.g., going to an object).

Contribution

Contributions to the existing architecture come in three possible ways:

- Replace an existing algorithm (i.e., node) with one that maintains the same input/output specifications. Experiments should then be conducted to show improved performance.

- Add an existing algorithm or capability to the existing system. Experiments should then be conducted to show augmented capability.

- Replace an existing capability (i.e., cluster of nodes) such that aggregate input/output specifications are maintained. Experiments should then be conducted to show maintained end-to-end performance and augmented capabilities.

To support this collaborative and cumulative engagement, software code developed under the SARA CRA will be added to the ARL Autonomy Stack Repository for use by current and future ARL and SARA sprint performers.

Sprint 2 Awards

Semantic Scene Perception and Active Planning for Navigation through Complex Vegetation (Massachusetts Institute of Technology)

Principal Investigators: Nicholas Roy, Jonathan How

Objective

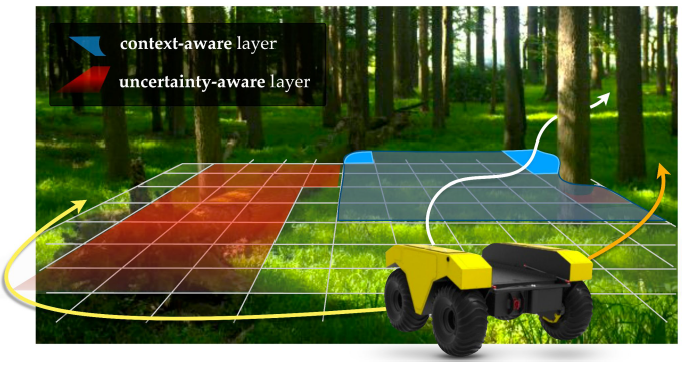

Navigation through complex and dense vegetation through semantic-, uncertainty-, and 3D-aware models of the environment.

ARL Autonomy Stack Context

Replace a geometry-based occupancy grid with a layered traversability costmap based on context-aware semantics, uncertainty-aware semantic perception, and 3D nature of terrain. Integrate this knowledge into new and existing planning algorithms in Phoenix.

Approach

- Learn to “fill in” gaps in knowledge to produce spatially consistent terrain map

- Capture 3D terrain features with multi-layer gridmap and voxel representations

- Develop local and global planning algorithms that ingest enhanced world models

Key Advancements

- Enhance costmaps by filling in unknown, occluded, and noisy regions with estimated costs, smoothing terrain inconsistencies, and capturing 3D terrain features, to enable accurate cost assignment of candidate motion plans

- Develop and/or enhance planners with knowledge of 3D terrain, uncertainty, and semantic context to enable robust traversal of complex terrain/vegetation

- Investigate the interplay of global and local planners to enable consistent progress toward goal as world model is updated

Learning Complex Terrain Maneuver from Demonstration (University of Washington)

Principal Investigators: Byron Boots, Dieter Fox, Sidd Srinivasa

Objective

Develop a set of tools for learning complex cost functions from human demonstrations and deliver a perception pipeline that appropriately informs behavior based on environmental context.

ARL Autonomy Stack Context

New algorithms for generating local costmaps from perceptual data

Approach

- Semantic terrain perception

- Learning costmaps from expert demonstrations

- Development, data collection, testing

Key Advancements

- Interpreting semantic representations of sensor data and terrain features directly into traversal costmap values

- Cost functions developed from learning expert behavior

- Integration of human preferences and mission context into a mission-dependent traversal cost

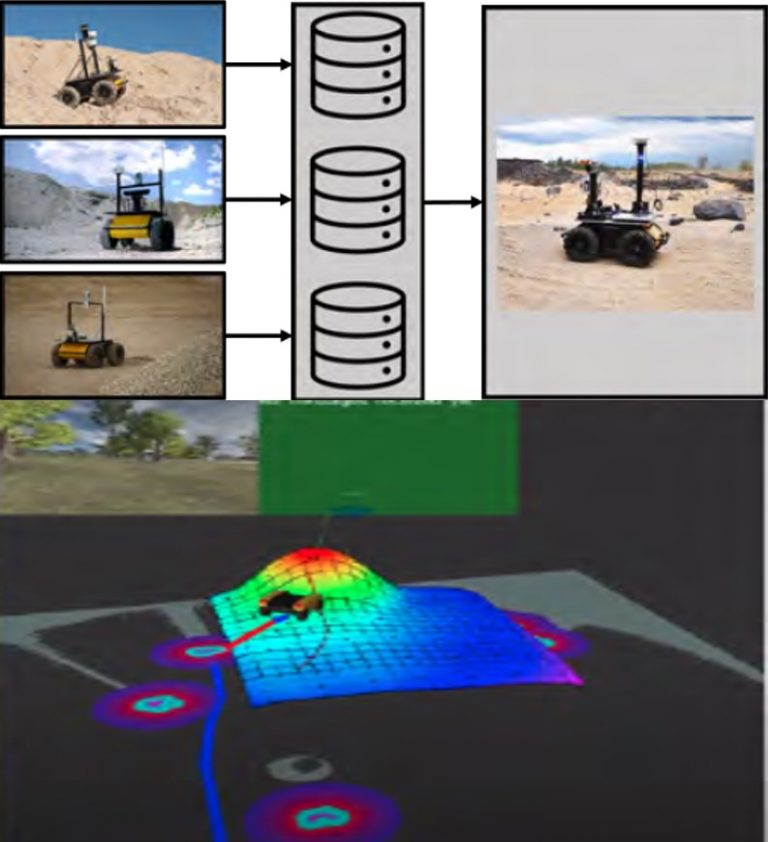

Adaptive and Uncertainty-Aware Perception for Off-road Navigation (Carnegie Mellon University)

Principal Investigators: Wenshan Wang, Sebastian Scherer

Objective

Enhance the autonomy of intelligent robots by learning a traversability map from interaction data, which takes into account both environment features and vehicle dynamics, and explicitly models the uncertainty based on multiple types of consistency.

ARL Autonomy Stack Context

- Perception algorithm that combines semantics, geometry, and vehicle dynamics

- Enhanced costmaps for terrain and dynamics aware planning

Approach

- Collect real-world interaction data

- Learn a dynamics-conditioned traversability map that includes environmental features (geometry and semantics) and dynamics features (robot pose and velocity)

- Estimate model uncertainty based on the temporal consistency and multi-modal consistency through model ensembles

- Exploit learned uncertainty to improve the safety and exploration efficiency during training

Key Advancements

- More accurate traversability map through dynamics and environment features

- Improved robustness of traversability estimation through terrain uncertainty estimates

Causal Inference Driven and Multi-Objective Decision Making for Cross-Domain Maneuver (Indiana University)

Principal Investigators: Lantao Liu

Objective

Enable UGV to learn traversability from sensor readings and to reason safe maneuver decisions from interacting with environments.

ARL Autonomy Stack Context

This project will contribute to off-road decision making (planning and control) and perception

Approach

- Collect real-world interaction data

- Learn a dynamics-conditioned traversability map that includes environmental features (geometry and semantics) and dynamics features (robot pose and velocity)

- Estimate model uncertainty based on the temporal consistency and multi-modal consistency through model ensembles

- Exploit learned uncertainty to improve the safety and exploration efficiency during training

Key Advancements

- More accurate traversability map through dynamics and environment features

- Improved robustness of traversability estimation through terrain uncertainty estimates

Sprint 1 Awards

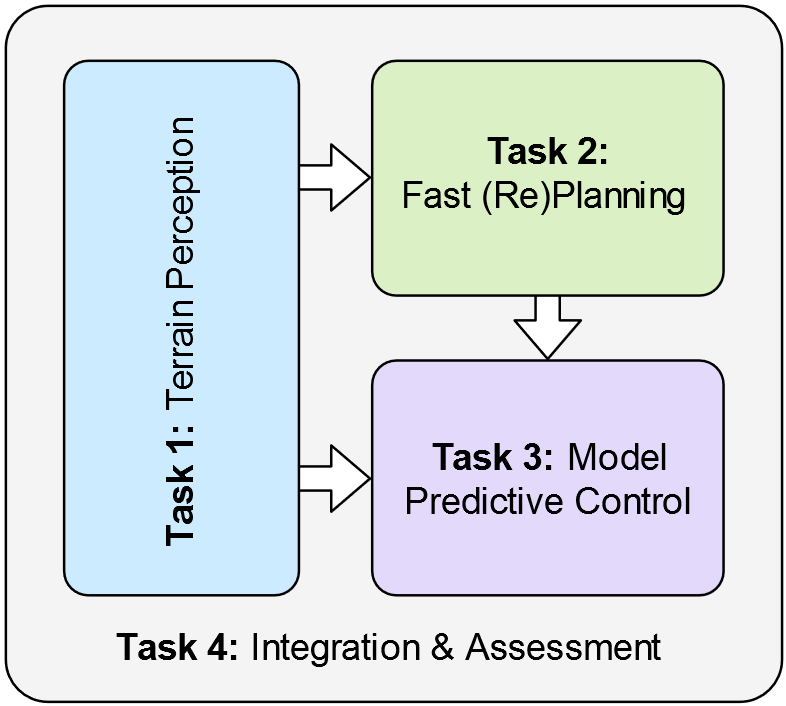

Safe, Fluent, and Generalizable Outdoor Autonomy (University of Washington)

Principal Investigators: Byron Boots, Siddhartha Srinivasa, Dieter Fox, with Panagiotis Tsiotras (Georgia Tech)

Objective

Deliver an integrated perception, planning, and control stack to enable fluent and safe robot operation in unprepared, uncertain, and dynamic environments.

ARL Autonomy Stack Context

Increase the mobility, resiliency, and operational tempo of ground maneuvers by integrating state-of-the-art perception, planning, and control algorithms into ARL’s ground mobile robot autonomy stack.

Approach

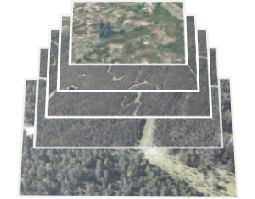

- Multiscale traversability maps from multimodal sensory inputs.

- Provably fast, anytime motion planning and replanning via lazy motion planners.

- Stochastic model predictive control for choosing near-optimal controls in real-time subject to constraints.

- Tight integration: perception directly informs planning, perception and planning directly inform control.

- Demonstration: algorithms deployed on the ARL stack and demonstrated on physical robots.

Key Advancements

- Perception that provides terrain features and traversability information to planning and control modules.

- Efficient lazy, global planning and replanning capabilities integrated with the control module.

- Fast model predictive control for obstacle avoidance and efficient maneuver over a variety of terrain.

- Integration and assessment of the above tasks within the ARL ground autonomy stack.

Uncertainty-Aware Autonomous Navigation (GE Research)

Principal Investigators: Dr. Shiraj Sen, Dr. Nurali Virani, Dr. Mohammed Yousefhussien, Dr. Steven Gray

Objective

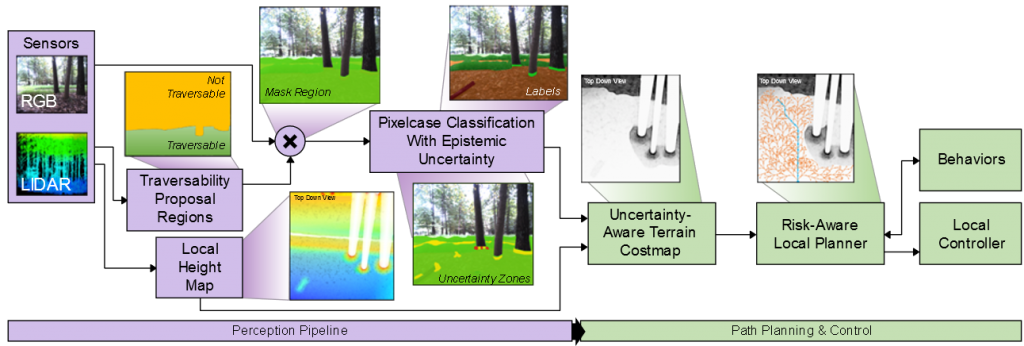

Improving ground maneuver speed and robustness through incorporation of uncertainty in machine learned models

ARL Autonomy Stack Context

- Augmented capability: pixelwise classification with uncertainty quantification, risk-aware local planner

- Add additional capabilities for costmap generation, traversability region computation, and behaviors

Approach

- Developing a justification framework that uses support from training (or fine-tuning) instances in two or more layers to construct epistemic uncertainty for the model’s output or belief

- Layered cost map and utility function that fuses epistemic uncertainty in pixelwise scene segmentation with geometric terrain data

- Risk-aware path planner to plan trajectories and execute behaviors that either avoid uncertain regions, or deliberately explore such regions, based on the risk tolerance

Key Advancements

- Novel approach to handling uncertainty in perception

- Risk-aware path planner

- ROS modules that either extend or add additional capability to the ARL Autonomy stack

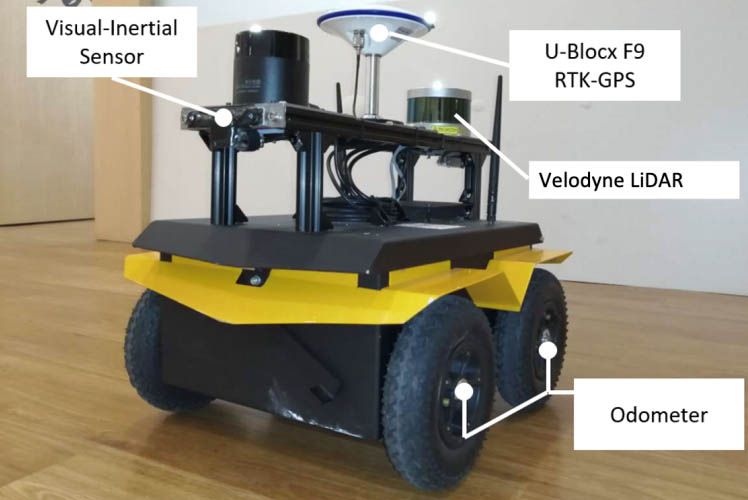

Resilient, Resource-adaptive Multi-sensor Fusion for Persistent Navigation (University of Delaware)

Principal Investigators: Guoquan (Paul) Huang and Herbert Tanner

Objective

Develop resource-adaptive multi-modal sensor fusion and state estimation algorithms for long-term, large-scale, robust localization and mapping, enabling persistent autonomous navigation.

ARL Autonomy Stack Context

- Sensors/calibration: Augment spatial/temporal multi-sensor calibration capabilities

- State estimation/odometry: Develop new multi-sensor odometry modules

- Mapping/: Improve SLAM by incorporating multi-modal measurements

- Planning/arl-navigation: Endow local planner with resource adaptiveness

Approach

- Motion-induced multi-sensor spatiotemporal calibration

- Multi-modal localization with online calibrationVisual-inertial-wheel odometry (VIWO)

- LiDAR-inertial-camera odometry

- Intermittent GPS-aided VIWO

- Optimal motion strategies under resource constraints

Key Advancements

- Unified multi-sensor calibration framework

- A set of consistent multi-modal odometry algorithms with online sensor calibration

- Optimal motion planning for active sensing, motion-based calibration

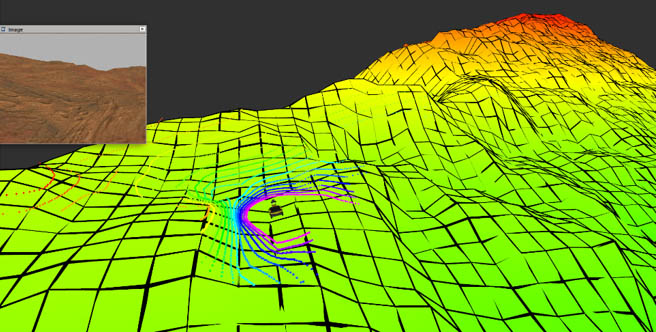

Efficiently Adaptive State Lattices for Robust Guidance, Navigation and Control of Unmanned Ground Vehicles (University of Rochester)

Principal Investigator: Thomas Howard

Objective

Develop new algorithms for model-predictive motion planning, navigation, and control that continuously refine and improve the efficiency of paths through environments with dynamic obstacles.

ARL Autonomy Stack Context

Improve the efficiency and solution quality of global planning in the metric planning and execution components

Approach

- Generate global motion plans with the Efficiency Adaptive State Lattice, which incorporates concepts from selective adaptation in Adaptive State Lattices with iterative refinement of recombinant motion planning search space over multiple planning queries.

- Relax the parameterized solution to find the most efficient motion in the homotopy class of the planned motion.

- Follow the optimized maneuver using the Receding Horizon Model-Predictive Controller to navigate paths that may exhibit path singularities and discontinuities.

Key Advancements

Quantitively improve the relative optimality and efficiency of heuristic search for mobile robot motion planning in off-road environments relative to suitable benchmarks for mobile robot motion planning, navigation, and control with recombinant motion planning search spaces.

Self-reflective Robot Adaptation to Unstructured Terrain for Off-road Ground Maneuver (Colorado School of Mines)

Principal Investigator: Hao Zhang

Objective

Develop a new ground robot capability of self-reflective adaptation to unstructured terrain for all-terrain ground maneuver in off-road environments, through an innovative joint learning approach that learns terrain representation and maneuver control conditioned on robot self-awareness.

ARL Autonomy Stack Context

This project will contribute to local maneuver planning and control.

Approach

- In order to enable terrain-aware maneuver adaptation, the proposed approach computes an offset according to the robot’s monitoring and representation of terrain, and uses the offset to compensate robot maneuver control for complex combinations of terrain changes in real time.

- In order to enable self-reflective maneuver adaptation, the approach modulates the parameters of a maneuver model according to the awareness of the ground robot itself.

Key Advancements

- This project will enable improved adaptive behaviors for autonomous ground maneuver and terrain aware navigation through unprepared off-road environments.

- This project will create algorithms for increased resiliency in terrain aware navigation to increase robustness to wide variations in environmental conditions.

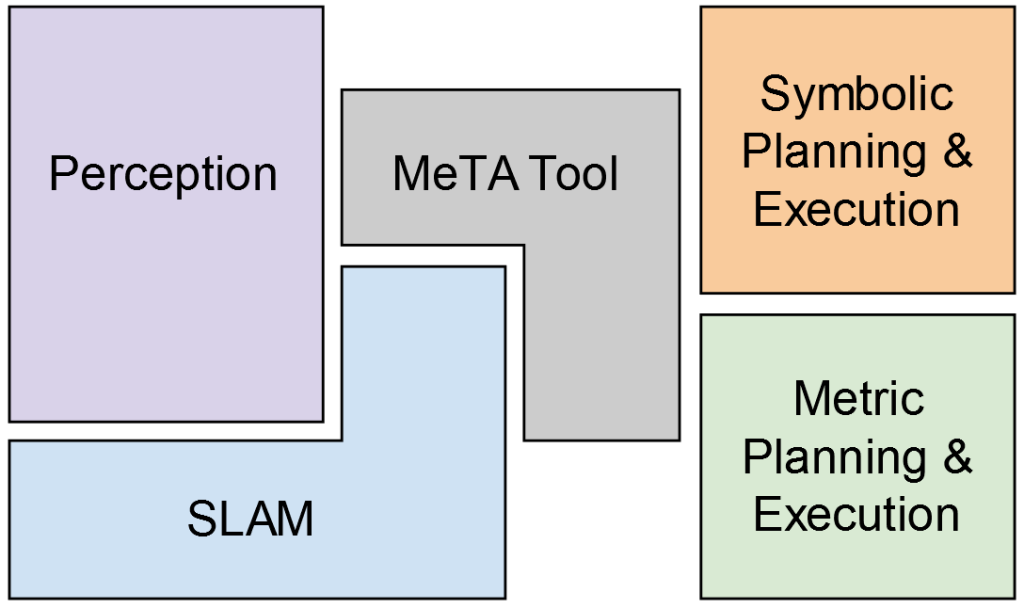

Enhancing Unmanned Maneuver through Mission-based Traversability Assessment (MeTA) Tool (Florida Institute for Human and Machine Cognition)

Principal Investigators: Robert Griffin, Matt Johnson, with Hakki Erhan Sevil (University of West Florida)

Objective

Filter a multi-layer traversability map based on specific vehicle and mission parameters for navigation plans in increasingly complex contexts and terrains.

ARL Autonomy Stack Context

- Take inputs from Perception and SLAM modules in the ARL Autonomy Stack

- Provide traversability inputs to the planning module

Approach

- Pack localized data from the SLAM and perception modules into an octree

- Design a readily extensible context-layer structure to allow the incorporation of multiple contexts

- Design the first context layer as the metric layer that includes traversability metrics such as roll-over angle

- Use a simplified path planner to demonstrate the usefulness of the traversability map

Key Advancements

- Readily extensible framework for considering different planning contexts as the mission requires

- Improved framework for navigation using these advanced contexts can include components such as semantically classified data and user annotations

- Framework for incorporation of elements such as data entropy for planning purposes

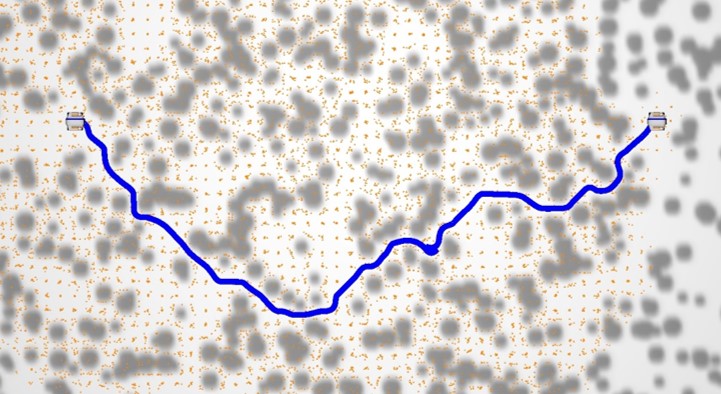

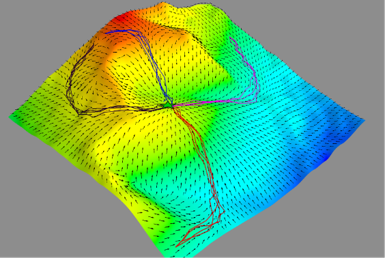

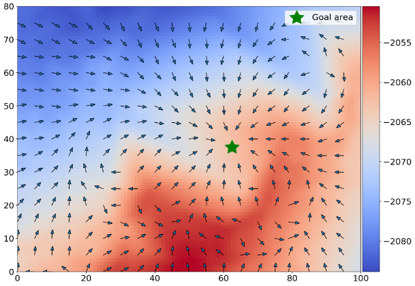

2.5D Terrain Adaptive Decision-Theoretic Planning for Autonomous Vehicles (Indiana University)

Principal Investigator: Lantao Liu

Objective

Develop a continuous-state based decision-making framework that allows vehicles to plan and navigate through complex 2.5 (even 3) Dimensional terrains.

ARL Autonomy Stack Context

Develop and integrate novel planning algorithms to enable resilient, terrain-aware navigation with dynamic obstacle avoidance over long distances of unstructured environments

Approach

- We propose a principled kernel-based policy iteration algorithm to solve the continuous-state Markov Decision Processes (MDPs).

- By combining the kernel representation of value function, we design an efficient policy iteration algorithm whose policy evaluation step can be represented as a linear system of equations characterized by a finite set of supporting states.

Key Advancements

- In contrast to most decision-theoretic planning frameworks which assume fully known state transition models, we design a method that eliminates such a strong assumption which is oftentimes extremely difficult to engineer in reality.

- Our supporting-state based method also alleviates the need for heavily searching in continuous/large state space and thus is adaptive to environments and requires small computational time.

High-speed and reactive planning, localization, perception and navigation for aerial robots in dynamic off-road environments (University of California, Berkeley)

Principal Investigators: Mark W. Mueller, Koushil Sreenath, and Avideh Zakhor

Objective

Improve autonomous mobility and maneuverability of an aerial robot through combination of high-speed, reactive local planning, multi-scale state estimation, and object detection via EO/IR fusion.

ARL Autonomy Stack Context

- Add multi-scale state estimation (enhances position state estimator); thin object detector

- Replace path planning and position control with high-speed agile local planner

Approach

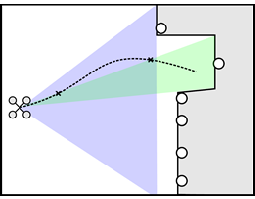

- Generate dynamically feasible local trajectories using a memoryless local planner directly in the depth image output of embedded sensors. MPC-like approach enables reactive & robust performance.

- Fuse downward-facing images with onboard multi-scale 3D terrain database for estimating pose at moderate altitudes.

- Fuse forward facing visible and thermal images to detect objects and thin obstacles at medium and long range. Use state-of-the art deep-learning approaches and sensor technology.

Key Advancements

- Tightly integrated and validated algorithms and hardware design, allowing for high-speed autonomous aerial maneuvers.

- Ability to detect and avoid obstacles typical to off-road scenarios.

- Reliable global state estimation under GPS-denied conditions